Our brains have evolved to recognize and remember faces. As infants, one of the first things we learn is to look at the faces of those around us, respond to eye contact and mimic facial expressions. As adults, this translates to an ability to recognize human faces better and faster than other visual stimuli. We’re able to instantly identify a friend’s face among dozens in a crowded restaurant or on a city street. And we can glean whether they’re excited or angry, happy or sad, from just a glance.

The ease of recognizing faces masks its underlying cognitive complexity. Faces have eyes, noses and mouths in the same relative place, yet we can accurately identify them from different angles, in dim lighting and even while moving. Brain-imaging studies have revealed we evolved several tiny regions the size of blueberries in the temporal lobe—the area under the temple—that specialize in responding to faces. Neuroscientists call these regions “face patches”. But neither data from brain scanners—functional magnetic resonance imaging—nor clinical studies of patients with implanted electrodes have explained exactly how the cells in these face patches work.

Now, using a combination of brain imaging and single-neuron recording in macaques, biologist Doris Tsao and her colleagues at Caltech have finally cracked the neural code for face recognition. The researchers found the firing rate of each face cell corresponds to separate facial features along an axis. Like a set of dials, the cells are fine-tuned to bits of information, which they can then channel together in different combinations to create an image of every possible face. “This was mind-blowing,” Tsao says. “The values of each dial are so predictable that we can re-create the face that a monkey sees, by simply tracking the electrical activity of its face cells.”

Previous studies had hinted at the specificity of these brain areas for targeting faces. In the early 2000s, while Tsao was a postdoc at Harvard Medical School, she and her collaborator, electrophysiologist Winrich Freiwald, obtained intracranial recordings from monkeys as they viewed a slide show of various objects and human faces. Every time a picture of a face flashed on the screen, neurons in the middle face patch would crackle with electrical activity. The response to other objects, such as images of vegetables, radios or even other body parts, was largely absent.

Further experiments indicated neurons in these regions could also distinguish between individual faces, and even between cartoon drawings of faces. Recording from the hippocampus of human subjects, neuroscientist Rodrigo Quian Quiroga found that pictures of actress Jennifer Aniston elicited a response in a single neuron. And pictures of Halle Berry, members of The Beatles or characters from The Simpsons activated separate neurons. The prevailing theory among researchers was that each neuron in the face patches was sensitive to a few particular people, says Quiroga, who is now at the University of Leicester in the U.K. and was not involved with the work. But Tsao’s recent study suggests scientists may have been mistaken. “She has shown that neurons in face patches don’t encode particular people at all, they just encode certain features,” he says. “That completely changes our understanding of how we recognize faces.”

To decipher how individual cells helped recognize faces, Tsao and her postdoc Steven Le Chang drew dots around a set of faces and calculated variations across 50 different characteristics. They then used this information to create 2,000 different images of faces that varied in shape and appearance, including roundness of the face, distance between the eyes, skin tone and texture. Next the researchers showed these images to monkeys while recording the electrical activity from individual neurons in three separate face patches.

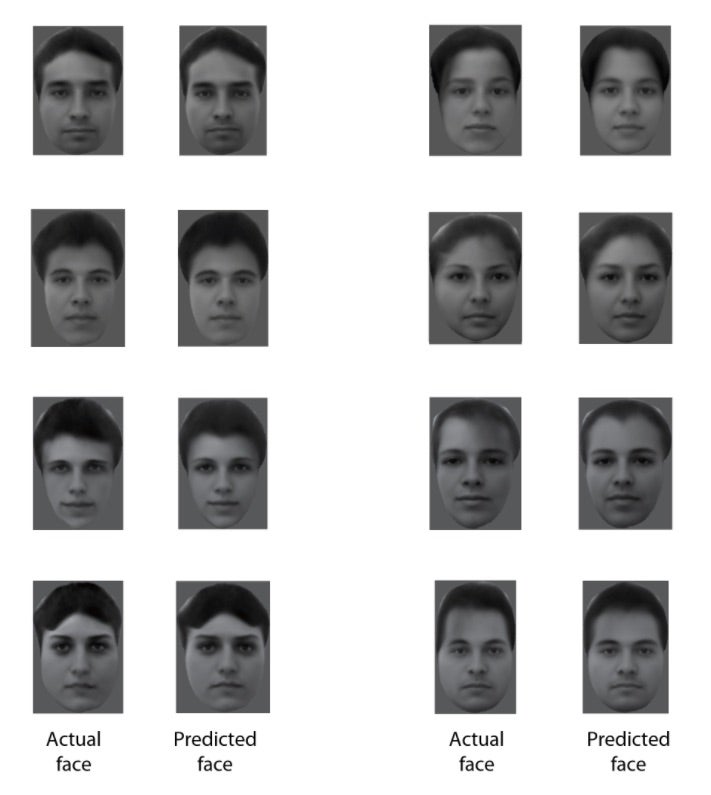

Eight real faces presented to a monkey contrast with reconstructions made by an analysis of electrical activity from 205 neurons recorded while the monkey viewed the faces. Credit: Doris Tsao

All that mattered for each neuron was a single-feature axis. Even when viewing different faces, a neuron that was sensitive to hairline width, for example, would respond to variations in that feature. But if the faces had the same hairline and different-size noses, the hairline neuron would stay silent, Chang says. The findings resolved a long-disputed puzzle in the previously held theory: why individual neurons seemed to recognize completely different people.
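
For readers who think in code, the single-axis idea can be illustrated with a toy model. This is only a sketch under stated assumptions, not the researchers' analysis: the three features, their values, the `firing_rate` function and the purely linear response are all hypothetical. It shows that a cell tuned to one axis responds identically to two faces that differ only along other features.

```python
# Toy model (hypothetical): a face is a list of feature values, and a neuron's
# response is the projection of that list onto the neuron's preferred axis.

def firing_rate(face, axis, baseline=0.0):
    """Return baseline plus the dot product of face features and the axis."""
    return baseline + sum(f * a for f, a in zip(face, axis))

# Made-up features, in order: [hairline width, nose size, eye spacing]
hairline_axis = [1.0, 0.0, 0.0]  # this simulated cell tracks hairline width only

face_a = [0.8, 0.2, 0.5]
face_b = [0.8, 0.9, 0.5]  # same hairline as face_a, different nose
face_c = [0.3, 0.2, 0.5]  # different hairline, same nose as face_a

rate_a = firing_rate(face_a, hairline_axis)
rate_b = firing_rate(face_b, hairline_axis)
rate_c = firing_rate(face_c, hairline_axis)

print(rate_a == rate_b)  # the nose change leaves the response unchanged
print(rate_a != rate_c)  # the hairline change alters the response
```

In this simplified picture, two very different-looking people can drive the same cell equally, as long as they share the same value along that cell's axis.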

Moreover, the neurons in different face patches processed complementary information. Cells in one face patch—the anterior medial patch—processed information about the appearance of faces such as distances between facial features like the eyes or hairline. Cells in other patches—the middle lateral and middle fundus areas—handled information about shapes such as the contours of the eyes or lips. Like workers in a factory, the various face patches did distinct jobs, cooperating, communicating and building on one another to provide a complete picture of facial identity.

Once Chang and Tsao knew how the division of labor occurred among the “factory workers,” they could predict the neurons’ responses to a completely new face. The two developed a model for which feature axes were encoded by various neurons. Then they showed monkeys a new photo of a human face. Using their model of how various neurons would respond, the researchers were able to re-create the face that a monkey was viewing. “The re-creations were stunningly accurate,” Tsao says. In fact, they were nearly indistinguishable from the actual photos shown to the monkeys.
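
The logic of reading a face back out of neural activity can be sketched with simulated data. The example below is an illustration under loud assumptions, not the team's actual pipeline: the "neurons" are random linear axes, the noise level is invented, and ordinary least squares stands in for whatever fitting procedure the researchers used. Only the counts (50 features, 2,000 training faces, 205 cells) are taken from the article.

```python
import numpy as np

rng = np.random.default_rng(0)
n_features, n_neurons, n_train = 50, 205, 2000  # counts reported in the article

# Assumption: each simulated cell has a random preferred axis and a baseline rate.
axes = rng.normal(size=(n_neurons, n_features))
baseline = rng.normal(size=n_neurons)

def respond(faces, noise=0.1):
    """Simulated firing rates: linear projection onto each axis plus noise."""
    jitter = noise * rng.normal(size=(faces.shape[0], n_neurons))
    return faces @ axes.T + baseline + jitter

def with_bias(rates):
    """Append a constant column so the decoder can absorb baseline rates."""
    return np.hstack([rates, np.ones((rates.shape[0], 1))])

# Step 1: present known faces and record the simulated responses.
train_faces = rng.normal(size=(n_train, n_features))
train_rates = respond(train_faces)

# Step 2: fit a linear map from firing rates back to face features.
decoder, *_ = np.linalg.lstsq(with_bias(train_rates), train_faces, rcond=None)

# Step 3: show a new face and reconstruct it from the responses alone.
new_face = rng.normal(size=(1, n_features))
reconstruction = with_bias(respond(new_face)) @ decoder

error = np.linalg.norm(reconstruction - new_face) / np.linalg.norm(new_face)
print(f"relative reconstruction error: {error:.3f}")
```

Because the simulated code is linear and there are more cells than feature dimensions, the reconstruction error in this toy setting is small. Real neurons are noisier and less tidy, so the sketch conveys the principle rather than the reported accuracy.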

Even more astonishing was the fact they only needed readings from a small set of neurons for the algorithm to accurately re-create the faces monkeys were viewing, Tsao says. Recordings from just 205 cells—106 cells in one patch and 99 cells in another—were enough. “It really speaks to how compact and efficient this feature-based neural code is,” she says. It may also explain why primates are so good at facial recognition, and how we can potentially identify billions of different people without needing an equally large number of face cells.

The findings, published June 1 in Cell, provide scientists with a comprehensive, systematic model for how faces are perceived in the brain. The model also opens up interesting avenues for future research, says Adrian Nestor, a neuroscientist who studies face patches in human subjects at the University of Toronto and also did not participate in the research. Understanding the facial code in the brain could help scientists study how face cells incorporate other identifying information, such as gender, race, emotional cues and the names of familiar faces, he says. It may even provide a framework for decoding how other nonfacial shapes are processed in the brain. “Ultimately, this puzzle is not just about faces,” Nestor says. “The hope is that this neural code extends to object recognition as a whole.”